Implicit/Machine learning gender bias

I ran across a headline recently, “Amazon scraps secret AI recruiting tool that showed bias against women,” that I realized provides a nice example of a few points we’ve been discussing in the lab.

First, I have found myself describing on a few recent occasions that it is reasonable to think of implicit learning (IL) as the brain’s machine learning (ML) algorithm. ML is a super-hot topic in AI and data science research, so this might be a useful analogy to help people understand what we mean by studying IL. We characterize IL as the statistical extraction of patterns in the environment and the shaping of cognitive processing to maximize efficiency and effectiveness in response to these patterns. And that’s the form of most of the really cool ML results popping up all over the place: automatic statistical extraction from large datasets that provides better prediction or performance than qualitative analysis had done.

Of course, we don’t know the form of the brain’s version of ML (there are a lot of different computational variations of ML) and we’re certainly bringing less computational power to our cognitive processing problems than a vast array of Google Tensor computing nodes. But perhaps the analogy helps frame the important questions.

As for Amazon, the gender bias result they obtained is completely unsurprising once you realize how they approached the problem. They trained an ML algorithm on previously successful job applicants to try to predict which new applicants would be most successful. However, since the industry and training data were largely male-dominated, the ML algorithm picked this up as a predictor of success. ML algorithms make no effort to distinguish correlation from causality, so they will generally be extremely vulnerable to bias.

But people are also vulnerable to bias, probably by basically the same mechanism. If you work in a tech industry that is male dominated, IL will cause you to nonconsciously acquire a tendency to see successful people as more likely to be male. Then male job applicants will look closer to that category and you’ll end up with an intuitive hunch that men are more likely to be successful — without knowing you are doing it or intending any bias at all against women.

An important consequence of this is that people exhibiting bias are not intentionally misogynistic (also note that women are vulnerable to the same bias). Another is that there’s no simple cognitive control mechanism to make this go away. People rely on their intuition and gut instincts and you can’t simply tell people not to as not doing so feels uncomfortable and unfamiliar. The only obvious solution to this is a systematic, intentional approach to changing the statistics by things like affirmative action. A diverse environment will eventually break your IL-learned bias (how long this takes and what might accelerate it is where we should be looking to science), but it will never happen overnight and will be an ongoing process that is highly likely to be uncomfortable at first.

In theory, it should be a lot quicker to fix the ML approach. You ought to be able to re-run the ML algorithm on a non-biased dataset that contains equal numbers of successful men and women. I’m sure the Amazon engineers know that, but the fact that they abandoned the project instead suggests that the dataset must have been really biased initially. You need a lot of data for ML, and if you restrict the input to twice the number of successful women (each matched with one successful man), you won’t have enough data if the hiring process was biased in the past (prior bias would also be a likely reason you’d want to tackle the issue with AI). They’d need to hire a whole lot more women (both successful and unsuccessful, btw, for the ML to work) and then retrain the algorithm. But we knew that was the way out of bias before we even had ML algorithms to rediscover it.
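To make the data-size problem concrete, here is a minimal sketch of the balancing step. The counts are made up for illustration (they are not Amazon’s numbers), and `balanced_subsample` is a hypothetical helper, not anything described in the Reuters article.

```python
import random

def balanced_subsample(records, group_key, seed=0):
    """Downsample every group to the size of the smallest group."""
    rng = random.Random(seed)
    groups = {}
    for rec in records:
        groups.setdefault(rec[group_key], []).append(rec)
    # The smallest group sets the ceiling for every other group.
    n = min(len(members) for members in groups.values())
    balanced = []
    for members in groups.values():
        balanced.extend(rng.sample(members, n))
    return balanced

# Hypothetical pool of previously successful hires: 900 men, 100 women.
hires = [{"gender": "M"}] * 900 + [{"gender": "F"}] * 100
balanced = balanced_subsample(hires, "gender")
print(len(hires), "->", len(balanced))  # 1000 -> 200
```

With a 9:1 imbalance, balancing throws away 80% of the examples, which is the "not enough data" problem in miniature.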

Original article via Reuters: https://www.reuters.com/article/us-amazon-com-jobs-automation-insight/amazon-scraps-secret-ai-recruiting-tool-that-showed-bias-against-women-idUSKCN1MK08G

Expertise in Unusual Domains

It’s tempting to call this kind of thing ‘stupid human tricks’ but it’s really awesome human tricks. I’m regularly fascinated by people who have pushed themselves to achieve extremely high levels of skill in offbeat areas. The skill performance here is amazing, clearly thousands of hours of practice.

With a lot of the more traditional skill areas where people push the performance boundaries way out, there is an obvious reward to getting very good. Music, visual arts, sports, even chess, all have big audiences that will reward being among the best in the world. Yoyo-ing has to be a bit different. Maybe I just don’t know the size of the audience, but it seems like the kind of thing you get good at if you think juggling is too mainstream.

Pretty amazing to watch, though.

The “Dan Plan”

I mentioned the Dan Plan a while ago as a fascinating real-world self experiment on the acquisition of expertise. Dan, the eponymous experimenter and experimentee, quit his job to try to spend 10,000 hours playing golf to see if he could meet a standard of ‘internationally competitive’ defined by winning a PGA tour card, starting from no prior golf experience at all.

I remembered the project awhile back and peeked in on it and it seemed to be going slowly. Then I ran across this write-up in the Atlantic summarizing the project and basically writing it off as a failure. I disagree!

https://www.theatlantic.com/health/archive/2017/08/the-dan-plan/536592/

Data collection in the real world is really hard and it seems remarkably unfair to Dan to say he “failed” when even this single data point is so interesting. As a TL;DR, he logged around 6000 hours of practice but then got sidetracked by injuries, bogged down by other constraints in life (e.g., making a living) and kind of petered out. To think about what we learned from his experience, let’s review the expertise model implied here.

The idea is that the level of expertise is a function of two components, talent and training (good old nature/nurture), and what we don’t know is the relative impact of each. The real hypothesis being tested is that maybe ‘talent’ isn’t so important and it’s just a matter of getting the right kind of training, i.e., “deliberate” training. As a note, Ericsson is both cited in the Atlantic article and was consulting actively with Dan through his training. Bob Bjork is also interviewed, although the relationship of his research is more tenuous than suggested, and the article does not properly appreciate how good a golfer he is reputed to be.

The real challenges here are embedded in understanding the details of both of these constructs. For training, I doubt we really know what the optimal ‘deliberate practice’ is for a skill like golf. Suppose Dan wasn’t getting the right kind of coaching, or even harder, the right kind of coaching for him (suppose there are different optimal training programs that depend on innate qualities, which makes a right mess out of the simple nature/nurture frame). One of the reasons we do lab studies with highly simplified tasks is to make the content issue more tractable and even then, this can be hard.

And the end of the Dan plan due to chronic injury points out an important part of the ‘talent’ question for skills that require a physical performance. Talent/genetic factors can show up as peripheral or central (as in nervous system). For athletes, it is clear that peripheral differences are really important: size, weight, peculiarities of muscle/ligament structure all matter. Nobody doubts that there are intrinsic, inherited, genetic aspects in those that can be influenced by exercise/diet but with significant limits. Tendency towards injury is a related and somewhat subtle aspect of this that might act as a big genetic-based factor in who achieves the highest level of performance in physical skills.

The existence of peripheral differences does not actually tell us much about the relative importance of central, brain-based, individual differences in skill learning, which are closer to questions we try to examine in the lab. It could be the case that there are some people who learn more from each practice repetition and as a result, achieve expertise more quickly (fwiw, we are failing to find this, although we are looking for it). But if any novice can get to high-level expertise in 10,000 hours, then maybe that is not as big a factor.

In my estimation, Dan got pretty far in his 6k hours. And even if he wasn’t able to fully test the core hypothesis due to injuries, it’d be great if this inspired some other work along this line. Maybe somebody would invest a few million in grant dollars into recruiting a bunch more people like Dan to spend ~5 years full-time commitment to various cognitive or physical skills, see what the learning curves are like, and get some more data on the relative importance of talent and time in expertise.

Leela Chess

Courtesy of Jerry (ChessNetwork), I found out today about Leela and the LCzero chess project (http://lczero.org/).

This appears to be a replication of the Google DeepMind AlphaZero project with open source and distributed computing contributing to the pattern learner.

Among the cool aspects of the project is that you can play against the engine after different amounts of learning. It’s apparently played around 2.5m games now and appears to have achieved “expert” strength.

There are a few oddities, though. First, if it is learning through self-play, are these games versus humans helping it learn (that is, are they used in the learning algorithm)? Probably practically that makes no difference if most of the training is from real self-play, but I’m curious. Second, they report a graph of playing ability of the engine but the Elo is scaling to over 4k, which doesn’t make any sense (‘expert’ Elo is around 2000). There really ought to be a better way to get a strength measure, for example, simply playing a decent set of games on any one of the online chess sites (chess.com, lichess.org). The ability to do this and the stability of chess Elo measures is a good reason to use chess as a model for exploring pattern-learning AI compared to human ability.
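For reference, the standard Elo model converts a rating gap into an expected score, which is part of why a rating only means something relative to the pool of opponents it was measured against. A small sketch of the standard formula follows; the example ratings are illustrative and are not measurements of Leela.

```python
def elo_expected_score(rating_a, rating_b):
    """Expected score for player A (win=1, draw=0.5, loss=0) under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))

print(round(elo_expected_score(2000, 2000), 3))  # 0.5
print(round(elo_expected_score(2400, 2000), 3))  # 0.909
```

A 400-point gap already predicts roughly a 91% score, so a number above 4000 would only be interpretable if it were anchored to the same pool as human ratings.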

But on the plus side, there is source code. I haven’t looked at the github repository yet (https://github.com/glinscott/leela-chess) but it’d be really interesting to constrain the alpha-beta search to something more human-like (narrow and shallow) and see if it is possible to play more like a human.

Jerry posted a video on youtube of playing against this engine this morning (https://www.youtube.com/watch?v=6CLXNZ_QFHI). He beats it in the standard way that you beat computers: play a closed position where you can gradually improve while avoiding tactical errors and the computer will get ‘stuck’ making waiting moves until it is lost. Before they started beating everybody, computer chess algorithms were known to be super-human at tactical considerations and inferior to humans at positional play. It turns out that if you search fast and deep enough, you can beat the best humans at positional play as well. But the interesting question to me for the ML-based chess algorithms is whether you can get so good at the pattern learning part that you can start playing at a strong human level with little to no explicit alpha-beta search (just a few hundred positions rather than the billions Stockfish searches).

Jerry did note in the video that the Leela engine occasionally makes ‘human like’ errors, which is a good sign. I think I’d need to unpack the code and play with it here in the lab to really do the interesting thing of figuring out the shape of the function, ability = f(million games played, search depth).

Adventures in data visualization

If you happen to be a fan of data-driven political analysis, you are probably also well aware of the ongoing challenge of how to effectively and accurately visualize maps that show US voting patterns. The debate over how to do this has been going on for decades but was nicely summarized in a 2016 article by the NYTimes Upshot section (https://www.nytimes.com/interactive/2016/11/01/upshot/many-ways-to-map-election-results.html).

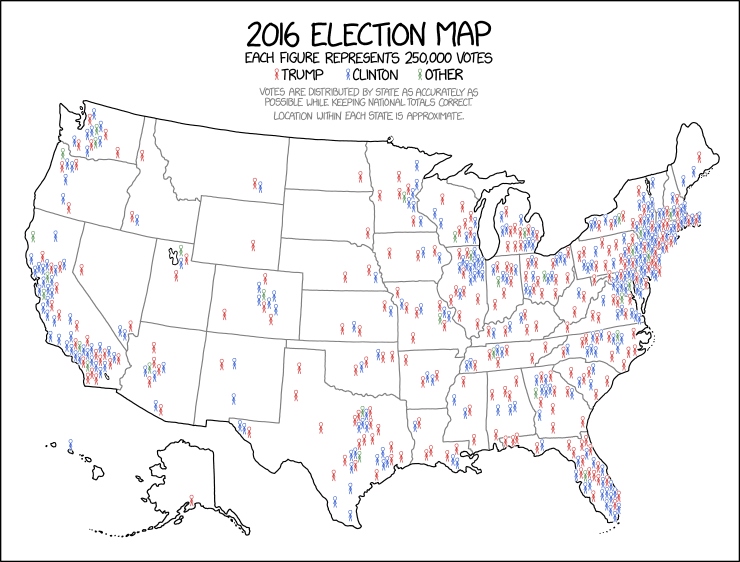

Recently, Randall Munroe of XKCD fame came up with a really nicely done version:

I was alerted to it by high praise for the approach from Vox:

https://www.vox.com/policy-and-politics/2018/1/8/16865532/2016-presidential-election-map-xkcd

Of particular note to me was that a cartoonist (albeit one with a strong science background) was the person who found the elegant solution to the density/population tradeoff issue across the US while also capturing the important mixing aspects (blue and red voters in every state). That isn’t meant as a knock on the professional political and data scientists who hadn’t come up with this approach, but more of a note on how hard data visualization really is and how the best, creative, effective solutions might therefore come from surprising sources.

https://xkcd.com/1939/

AlphaZero Beats Chess In 4 (!?) hours

Google’s DeepMind group updated their game learning algorithm, now called AlphaZero, and mastered chess. I’ve seen the game play and it elegantly destroyed the previous top computer chess-playing algorithm (the computers have been better than humans for about a decade now), Stockfish. Part of what is intriguing about their claim is that the new algorithm learns entirely from self-play with no human data involved, plus the learning process is apparently stunningly fast this way.

Something is weird to me about the training time they are reporting, though. Key things we know about how AlphaZero works:

- Deep convolutional networks and reinforcement learning. This is a classifier-based approach that is going to act the most like a human player. One way to think about this is if you could take a chess board and classify it as a win for white, win for black, or draw (with a perfect classifier algorithm). Then to move, you simply look at the position after each of your possible moves and pick the one that is the best case for your side (win or draw).

- Based on the paper, the classifier isn’t perfect (yet). They describe using a Monte-Carlo tree search (MCTS), which is how you would use a pretty good classifier. Standard chess algorithms rely more on Alpha-Beta (AB) tree search. The key difference is that AB search is “broader” and searches every possible move, response move, next move, etc. as far as it can. The challenge for games like Chess (and even more for Go) is that the number of positions to evaluate explodes exponentially. With AB search, the faster the computers, the deeper you can look and the better the algorithm plays. Stockfish, the current world champ, was searching 70m moves/sec for the match with AlphaZero. MCTS lets you search smarter, more selectively, and only check moves that the current position makes likely to be good (which is what you need the classifier for). AlphaZero is reported at searching only 80k moves/sec, about a thousand times fewer than Stockfish.
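The "perfect classifier" idea in the first point can be sketched on a toy game where a perfect classifier actually exists. This is not chess and not AlphaZero’s code; the game (take 1 to 3 stones, taking the last stone wins) and the function names are my own illustration.

```python
def is_win_for_player_to_move(stones):
    """Perfect 'classifier' for the toy game: multiples of 4 are lost for the mover."""
    return stones % 4 != 0

def pick_move(stones):
    """One-ply lookahead: classify the position after each legal move."""
    legal = [take for take in (1, 2, 3) if take <= stones]
    for take in legal:
        # After our move the opponent is to move, so we want a position
        # that the classifier says is lost for the player to move.
        if not is_win_for_player_to_move(stones - take):
            return take
    return legal[0]  # every move loses against perfect play, so pick any

print(pick_move(10))  # 2 (leaves 8, a lost position for the opponent)
```

With a perfect classifier no deeper search is needed; the search (AB or MCTS) is there to compensate for the classifier’s mistakes.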

That all makes sense. In fact, this approach is one we were thinking about in the early 90s when I was in graduate school at CMU talking about (and playing) chess with Fernand Gobet and Peter Jansen. Fernand was a former chess professional, retrained as a Ph.D. in Psychology and doing a post-doc with Herb Simon. Peter was a Computer Science Ph.D. (iirc) working on chess algorithms. We hypothesized that it might be possible to play chess by using the patterns of pieces to predict the next move. However, it was mostly idle speculation since we didn’t have anything like the classifier algorithms used by DeepMind. Our idea was that expert humans have a classifier built from experience that is sufficiently well-trained that they can do a selective search (like MCTS) of only a few hundred positions and manage to play as well as an AB algorithm that looked at billions of positions. It looks like AlphaZero is basically this on steroids – a better classifier, more search and world champion level performance.

The weird part to me is how fast it learned without human game data. When we were thinking about this, we were going to use a big database of grandmaster games as input to the pattern learner (a pattern learner/chunker was Fernand’s project with Herb). AlphaZero is reported as generating its own database of games to learn from by ‘playing itself’. In the Methods section, the number of training games is listed at 44m. That looks way too small to me. If you are picking moves randomly, there are more than 9m positions after 3 moves and several hundred billion positions after 4 moves. AlphaZero’s training is described as being in up to 700k ‘batches’ but even if each of those batches has 44m simulations, there are nowhere near enough games to explore even a decent fraction of the first 10 or so moves.
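A back-of-the-envelope version of this argument is sketched below. It assumes a constant branching factor of 20 (the number of legal first moves for each side; real middlegame positions usually offer more) and counts move sequences rather than distinct positions, so the numbers will not match the position counts quoted above. It is only meant to show the shape of the growth.

```python
BRANCHING = 20               # assumed constant branching factor (illustrative)
TRAINING_GAMES = 44_000_000  # training-game count quoted above from the Methods section

def move_sequences(full_moves, branching=BRANCHING):
    """Number of distinct move sequences after `full_moves` moves by each side."""
    return branching ** (2 * full_moves)

for full_moves in (3, 4, 10):
    sequences = move_sequences(full_moves)
    coverage = TRAINING_GAMES / sequences
    print(f"{full_moves} moves: {sequences:.2e} sequences, coverage {coverage:.2e}")
```

Under these assumptions, 44m games is already smaller than the number of 3-move sequences and is a vanishing fraction by move 10, so random exploration cannot be what is doing the work.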

Now if I were training a classifier as good as AlphaZero, what I would do is to train it against Stockfish’s engine (the previous strongest player on the planet) for at least the first few million games, then later turn it loose on playing itself to try to refine the classifier further. You could still claim that you trained it “without human data” but you couldn’t claim you trained it ‘tabula rasa’ with nothing but the rules of chess wired in. So it doesn’t seem that they did that.

Alternately, their learning algorithm may be much faster early on than I’d expect. If it effectively pruned the search space of all the non-useful early moves quickly, perhaps it could self-generate good training examples. I still don’t understand how this would work, though, since you theoretically can’t evaluate good/bad moves until the final move that delivers checkmate. A chess beginner who has learned some ‘standard opening theory’ will make a bunch of good moves, then blunder and lose. Learning from that game embeds a ‘credit assignment’ problem of identifying the blunder without penalizing the rating of the early correct moves. That kind of error is going to happen at very high rates in semi-random games. Why doesn’t it require billions or trillions of games to solve the credit assignment problem?

Humans learn chess in many fewer than billions of games. A big part of that is coming from directed (or deliberate) practice from a teacher. The teacher can just be a friend who is a little better at chess so that the student’s games played are guided towards the set of moves likely to be good and then our own human machine learning algorithm (implicit learning) can extract the patterns and build our classifier. The numbers reported on AlphaZero sound to me like it should have had a teacher. Or there are some extra details about the learning algorithm I wish I knew.

But what I’d really like is access to the machine-learning algorithm to see how it behaves under constraints. If our old hypothesis about how humans play chess is correct, we should be able to use the classifier and reduce the number of look-ahead evaluations to a few hundred (or thousand) and it should play a lot more like a human than Stockfish does.

Links to the AlphaZero reporting paper:

https://arxiv.org/abs/1712.01815v1

https://arxiv.org/pdf/1712.01815v1.pdf

Cognitive Symmetry and Trust

A chain of speculative scientific reasoning from our work into really big social/society questions:

- Skill learning is a thing. If we practice something we get better at it and the learning curve goes on for a long time, 10,000 hours or more. Because we can keep getting better for so many hours, nobody can really be a top-notch expert at everything (there isn’t time). This is, therefore, among the many reasons why in group-level social functioning it is much better to specialize and have multi-person projects done by teams of people specializing in component steps (for tasks that are repeated regularly). The economic benefits of specialization are massive and straightforward.

- However, getting people to work well in teams is hard. In most areas requiring cooperation, there is the possibility of ‘defecting’ instead of cooperating on progress – to borrow terms from the Prisoner’s Dilemma formalism. That powerful little bit of game theory points out that in almost every one-time 2-person interaction, it’s always better to ‘defect,’ that is, to act in your own self-interest and screw over the other player.

- Yet, people don’t. In general, people are more altruistic than overly simple game-theory math would predict. Ideas for why that model is wrong include (a) extending the model to repeated interactions where we can track our history with other players and therefore cooperation is rewarded by building a reputation; (b) that humans are genetically prewired for altruism (e.g., perhaps by getting internal extra reward from cooperating/helping); or (c) that social groups function by incorporating ‘punishers’ who provide extra negative feedback for the non-cooperators to reduce non-cooperation.

- These three alternatives aren’t mutually exclusive, but further consideration of the (3a) theory raises some interesting questions about cognitive capacity. We interact a lot with a lot of different people in our daily lives. Is it possible to track and remember everything about our interactions in order to make optimal cooperate/defect decisions? Herb Simon argued (Science, 1990) that we can’t possibly do this, working along the same lines as his ‘bounded rationality’ reasoning that won him the Nobel Prize in Economics. His conclusion was that (3b) was more likely and showed that if there was a gene for altruism (he called it ‘docility’), it would breed into the population pretty effectively.

- No such gene has yet been identified and I have spent some time thinking about alternate approaches based on potential cognitive mechanisms for dealing with the information overload of tracking everybody’s reputation. One really interesting heuristic I ran across is the Symmetry Hypothesis, which I have slightly recast for simplicity. This idea is a hack to the PD where you can reason very simply as follows: If the person I am interacting with is just like me and reasons exactly as I do, no matter what I decide, they are going to do the same and in this case, I can safely cooperate because the other player will too. And if I defect, they will also (potentially allowing group social gains through competition, which is a separate set of ideas).

- Symmetry would apply in cases where the people you often interact with are cognitively homogeneous, that is, where everybody thinks ‘we all think alike.’ Here, ‘we’ can be any social group (family, neighborhood, community, church, club, etc.). If this is driving some decent fraction of altruistic behavior, you’d see strong tendencies for high levels of in-group trust (compared with out-group), and particularly in groups that push people towards thinking similarly. You clearly do see those things, but their existence doesn’t actually test the hypothesis: there are many theories that predict in-group/out-group formation, that these affect trust, and that people who identify in a group start to think similarly. Of note, though, this idea is a little pessimistic because it suggests that groupthink leads to better trust and that social groups should tend to treat novel, independent thinkers poorly.

- Testing the theory would require data examining how important ‘thinks like me’ is to altruistic behavior and/or how important cognitive homogeneity is to existing strong social groups/identity. This is a potential area of social science research a bit outside our expertise here in the lab.

- But if true, the learning-related question (back to our work) is whether a tendency to rely on symmetry can be learned from our environment. I suspect yes, that feedback from successful social interactions would quickly reinforce and strengthen dependency on this heuristic. I think that this could cause social groups to become more cognitively homogeneous in order to be more effectively cohesive. Cognitively homogeneous groups would have higher trust, cooperate better and be more productive than non-homogeneous groups, out-competing them. This could very well create a kind of cultural learning that would persist and look a lot like a genetic factor. But if it was learned (rather than prewired), that would suggest we could increase trust and altruism beyond what we currently see in the world by learning to allow more diverse cognitive approaches and/or learning to better trust out-groups.
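The difference between the standard PD reasoning and the symmetry reasoning in the chain above can be written down in a few lines. The payoff numbers are the conventional illustrative values (not data), and the function names are mine.

```python
# Payoffs as (my_payoff, their_payoff); higher is better. Illustrative values only.
PAYOFF = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}

def best_reply(their_move):
    """Standard reasoning: my best response to a fixed move by the other player."""
    return max("CD", key=lambda mine: PAYOFF[(mine, their_move)][0])

def symmetric_choice():
    """Symmetry reasoning: assume the other player ends up choosing whatever I choose."""
    return max("CD", key=lambda move: PAYOFF[(move, move)][0])

print(best_reply("C"), best_reply("D"))  # D D
print(symmetric_choice())                # C
```

Standard reasoning compares outcomes within a column of the payoff table, so defecting always wins; symmetry reasoning only ever compares the two diagonal outcomes, so cooperating wins. The whole disagreement is over whether restricting attention to the diagonal is justified.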

I was moved to re-iterate this chain of ideas because it came up yet again in a conversational drift into politics among people in the lab, although our internal debates usually center around how different groups treat the out-groups and why. Yesterday, the discussion started with observing that people we didn’t agree with seemed often to be driven by fear/distrust/hate of those in their out-groups. However, it was not clear whether, if you didn’t feel that way, you had managed to see all of humanity as your in-group or instead had found/constructed an in-group that avoided negative perception of the out-groups. We did not come to a conclusion.

FWIW, this line of thinking depends heavily on the Symmetry idea, which I discovered roughly 10 years ago via Brad DeLong’s blog (http://delong.typepad.com/sdj/2007/02/the_symmetry_ar.html). According to the discussion there, it is also described as the Symmetry Fallacy and not positively viewed among real decision scientists. I have recast it slightly differently here and suspect that among the annoying elements is that I’m using an underspecified model of bounded rationality. That is, for me to trust you because you think like me, I’m assuming both of us have slightly non-rational decision processes that for unspecified reasons come to the same conclusion that we are going to trust each other. Maybe there’s a style issue where a cognitive psychologist can accept a ‘missing step’ like this in thinking (we deal with lots of missing steps in cognitive processing) where a more logic/math approach considers that anathema.

Evidence and conclusions

I think this should be the last note on this topic for a while, but since it’s topical, a new piece of data popped up related to possible sources of gender outcome differences in STEM-related fields.

The new piece of data was reported in the NY Times Upshot section, titled “Evidence of a Toxic Environment for Women in Economics.”

The core finding is that on an anonymous forum used by economists (grads, post-docs, profs) there are a lot of negatively gendered associations for posts in which the author makes explicitly gendered reference. In general, posts about men are more professional and posts about women are not (body-based, sex-related and generally sexist). Note that “more” here is measured as a likelihood ratio, which is mathematically well defined, but the effect size is not trivially extracted from it. Because we always like to review primary sources, I dug up the source, which has a few curious features.

First, it’s an undergraduate thesis, not yet peer-reviewed, which is an unusual source. However, I looked through it and it is a sophisticated analysis that looks to be done in a careful, appropriate and accurate way (mainly based on logistic regression). I read through it a bit and the method looks strong, but is complex enough that there could be an error hiding in some of the key definitions and terms.

Paper link:

https://www.dropbox.com/s/v6q7gfcbv9feef5/Wu_EJMR_paper.pdf?dl=0

Of note, the paper seems to be a careful mathematical analysis of something “everybody knows” which is that anonymous forums frequently include high rates of stereotype bias against women and minorities. But be very careful with results that confirm what you already know, that’s how confirmation bias works. In addition, economists may not be an effective proxy for all STEM fields. I don’t know of a similar analysis for Psychology, for example.

But as an exercise, let’s consider the possibility that the analysis is done correctly and truly captures the fact that there are some people in economics who treat women significantly differently than they treat men, i.e., that their implicit bias affects the working environment. So we have 3 data points to consider.

1. There are fewer women in STEM fields than men

2. There are biological differences between men and women (like height)

3. There are environmental differences that may affect women’s ability to work in some STEM fields

The goal, as a pure-minded scientist is to understand the cause of (1). Why are there fewer women in STEM? The far-too-frequent inference error is when people (like David Brooks) take (2) as evidence that (1) is caused by biological differences. That’s simply an incorrect inference.

It turns out to be helpful to know about (3) but only because it should reduce your certainty that (2) implies (1). It’s still critical to realize that we do not know that environment causes (1) either. All we know is that we have multiple hypotheses consistent with the data and we don’t know which is right.

What we do know is that (3) is objectively bad socially. Even if (2) meant there were either mean or distributional differences between men and women, the normal distribution means there are still women on the upper tail and if (3) keeps them out of STEM, that hurts everybody.

The googler’s memo assumes (2) and reinforces (3), which is clearly and objectively a fireable offense.

See the problem yet?

The entirely predictable backlash against Google for firing the sexist manifesto author has begun. Among the notable contributors is the NY Times Editorial page in the form of David Brooks. In support of his position that the Google CEO should resign, he’s even gone so far as to dig up some evolutionary psych types to assert that men and women do, indeed, differ and therefore the author was on safe scientific ground.

The logical errors are consistent and depressing. Nobody is arguing that men and women don’t differ on anything. The question is whether they differ on google-relevant work skills. Consider the following 2 fact-based statements:

1. Men are taller than women

2. Women are more inclined towards interpersonal interactions

There is data to support both statements on aggregate and statistically across the two groups. The first statement is clearly a core biological difference with a genetic basis (but irrelevant to work skills at Google). However, the fact that (1) has a biological basis does not mean the second statement does. The alternative hypothesis is that (2) has arisen from social and cultural conditions, not something about having XX or XY genes (or estrogen/androgen). The question is between these two statements:

2a. Women are more inclined towards interpersonal interactions because of genetic differences

2b. Women are more inclined towards interpersonal interactions because they have learned to be

And while statement 2 is largely consistent with observations (e.g., survey data on preferences), we have no idea at all which of 2a or 2b is true (or even if the truth is a blend of both). Just because an evo psych scientist can tell a story about how this could have evolved does not make it true either, it just means 2a is plausible (evo psych cannot be causal). It’s unambiguous that 2b is also plausible. There simply isn’t data that clearly distinguishes one versus the other and anybody who tells you otherwise is simply exhibiting confirmation bias.

And anybody even considering that the firing was unjust needs to read the definitive take-down of the manifesto by a former Google engineer, Yonatan Zunger, who does not even need to consider the science, just the engineering:

https://medium.com/@yonatanzunger/so-about-this-googlers-manifesto-1e3773ed1788

His core point is that software engineering at Google is necessarily a team-based activity, which means (a) the social skills the manifesto author attributes to women are actually highly valuable and (b) the manifesto author no longer has any prospect of being part of a team at Google, because he has asserted that many colleagues are inferior (and many others will be unhappy they’d even have to argue the point with him). Given that any of these skills is likely normally distributed across the population and that Google selectively hires from the high end for both men and women, even if his pseudoscience were correct, you’d still have to fire him to maintain good relationships with the women you’ve already hired from the very high end of the skill distribution.

It’s kind of amazing how bad so many people are at basic logic once a topic touches their implicit bias. Depressing, too.

Anti-diversity “science”

Somebody at Google wrote a memo/manifesto arguing against diversity (mainly gender), caused something of a ruckus and got himself fired. The author was clearly either trying to get terminated (as a martyr) or simply not very bright. A particularly articulate explanation of why it is necessary to fire somebody who did what he did is here (TL;DR the memo author doesn’t seem to understand very important things about engineering or being part of a company that has engineering teams):

https://medium.com/@yonatanzunger/so-about-this-googlers-manifesto-1e3773ed1788

There are a number of interesting things about the whole episode, but one that has popped up recently in lab discussions is the question of when it is possible for science to be ‘dangerous.’ The memo provides a convenient example, since an early section attempts to wrap assumptions of biologically-driven gender differences in a thin veneer of science. It’s a particularly poorly done argument, which I think makes it easier to see the overall flaws in the inference.

The argument is something like:

Men and women differ on X due to innate, biological and immutable differences (feel free to throw “evolutionary” in there as well if you’d like). Men and women also differ on Y, which therefore must also be due to innate and immutable biological causes.

That there exists some value of X that makes the first statement true (e.g., the number of X chromosomes) is not really worth arguing about. It should be obvious that you can’t assert the second statement regardless. I usually frame it as a reminder to consider the alternate hypothesis, which we can state here as “Men and women differ on Z, which is due to cultural and environmental differences.” Cross-cultural studies of gender differences make it unambiguously clear that there are values of Z that make this third statement true as well.

So what do we do about the middle statements, for values of Y for which we do not know if they are based mainly on nature or nurture? Well, for one thing, we don’t make policy statements based on them.

For another, though, we’d like to do science that tackles difficult and thorny issues like nature vs nurture, individual differences, stability of personality measures, effects of education, culture and environment. But how do we do science, which is often messy and even unstable on the cutting edge, when there are ideologically minded individuals waiting to seize on preliminary findings to drive a political agenda?

I don’t actually know. And that bothers me a fair amount.

If you doubt the danger inherent here, consider that you can make a pretty good case that the current president of the US is largely in place due to exactly this kind of bad, dangerous science. The alt-right, which probably moved the needle enough to swing the very close election, is a big fan of genetic theories of IQ, especially ones that support the assumption that the privileged deserve all their advantages. So they are highly invested in the discredited book The Bell Curve and the type of argument that helped cost Larry Summers the presidency of Harvard (the ‘fat tails’ hypothesis of gender differences). These ideas are generally focused through the lens of Ayn Rand’s Objectivism, which asserts the moral necessity of rule of the privileged over the masses, and which is, fwiw, pretty well reflected in the Googler’s memo as well.